Evaluating performance is an important part of any machine learning algorithm.

Unfortunately, evaluating performance is hard. Moreover, the strategies for evaluating performance are different for each algorithm.

In this tutorial, you will learn how to measure performance for the type of supervised machine learning algorithms called classification problems.

Table of Contents

You can skip to a specific section of this Python machine learning tutorial using the table of contents below:

- Evaluating the Performance of Machine Learning Classification Algorithms

- Principles of Classification Performance Measurement

- An Example Classification Problem

- Classification Performance Metric #1: Accuracy

- Classification Performance Metric #2: Recall

- Classification Performance Metric #4: F1-Score

- The Confusion Matrix

- Final Thoughts

Evaluating the Performance of Machine Learning Classification Algorithms

In the last lesson, you learned how we divided a typical machine learning data set into three groups:

- Training Data

- Validation Data

- Test Data

We use the third set - the test data - to calculate performance metrics.

The key classification performance metrics that you need to understand are:

- Accuracy

- Recall

- Precision

- F1-Score

We will explore each metric in this tutorial. First, let's discuss the broad principles of machine learning performance measurement for classification problems.

Principles of Classification Performance Measurement

In any machine learning classification problem, you can only have one of two outcomes:

- Your model was correct in its prediction

- Your model was incorrect in its prediction

All classification performance metrics build on this core principle.

Fortunately, this correct vs. incorrect dichotomy expands to situations where you can classify results into more than two classes.

Keep this in mind through the rest of this tutorial as we will assume a binary classification situation (with only two classes).

An Example Classification Problem

As we move on to learning about the different performance metrics used in classification problems, it is helpful to have a sample problem to use.

I wanted to provide a sample problem because of this.

Imagine that you are in charge of an insurance company's claims department. Your insurance customers can submit a photo of their cars after motor vehicle accidents using the company's mobile app. This has created a large data set of car photos that your team will be performing machine learning classification on.

A group of employees have categorized this data set. More specifically, they have labelled each photo as either (1) a write-off or (2) repairable. The goal is to create a classification algorithm that makes the repair-or-replace decision. This will save money by allowing you to reduce the size of your insurance claims department.

With this example in mind, let's move on to exploring the different performance metrics used in classification problems.

Classification Performance Metric #1: Accuracy

Accuracy is one of the most commonly-used performance metrics for classification problems. It is also one of the easiest to understand.

In classification problems, we define accuracy as the number of correct predictions made by the model divided by the total number of predictions.

Let's consider an example. If your insurance claims classification algorithm made 10000 predictions and 7000 of them were correct, then your model's accuracy is 70%.

Accuracy as a performance metric works best when the data set's classes are similar in size. In our example, this would mean that we have a similar number of images for both written-off vehicles and repairable vehicles.

To understand why, imagine that the data set contains 90% write-offs. This means that only 10% of the cars in the image data set were repairable.

A very simple model that always predicts "write-off" would already have a 90% accuracy rate on this data set.

Because of this, other performance metrics (like those discussed next) are necessary.

Classification Performance Metric #2: Recall

In machine learning, recall is the ability of a model to find all relevant cases within a data set.

The precise definition of recall is below:

"The number of true positive divided by (the number of true positives plus the number of false negatives)".

We will learn more about the meaning of true positive later in this tutorial.

Classification Performance Metric #3: Precision

In machine learning, precision is the ability of a classification model to identify only the relevant data points.

The real definition of precision is below:

"The number of true positives divided by (the number of true positives plus the number of false positives)"

The Trade-Off Between Recall and Precision

In classification problems, there often exists a trade-off between recall and precision.

While recalls measures the ability to find all relevant instances in a dataset, precision measures the data points that our model says were relevant that actually are relevant.

To find a proper balance between precision and recall, we use the F1-Score.

Classification Performance Metric #4: F1-Score

In machine learning, we use the F1-Score to find the optimal blend of precision and recall. The F1-Score is the harmonic mean of the precision and recall scores.

More specifically, here is the formula used to calculate the F1-Score:

We use the harmonic mean to calculate the F1-Score because of its tendency to punish extreme values.

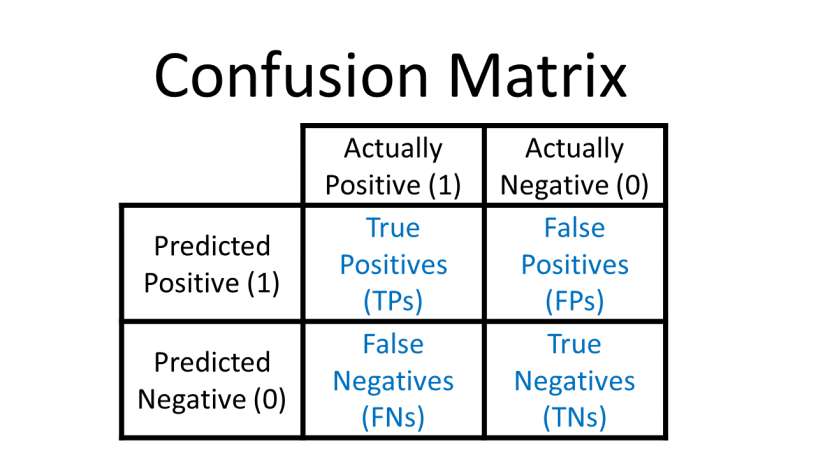

The Confusion Matrix

Machine learning practitioners often refer to a diagram called the confusion matrix to help understand the value assigned to an observation in a data set.

Here is an example of a confusion matrix:

There is a lot of information in this table.

The main point to remember is that the confusion matrix (and the performance metrics discussed in this tutorial) are just ways of comparing predicted values to true values. The "best" metric to use is highly situation-dependent.

Final Thoughts

In this tutorial, you learned about the various performance metrics used in machine learning classification problems.

Here is a brief summary of what you learned:

- The four key performance metrics for machine learning classification problems: accuracy, recall, precision, and F1-score

- How insurance claims photographs are a good example of a machine learning classification problem

- The trade-off between recall and precision

- How the F1-score aims to find a good balance between recall and precision

- Why we use a confusion matrix to keep track of false and true positives